Do you want to share your website’s public content with AI bots that collect data for training generative AI or other artificial intelligence systems? If not, this article is for you.

Disclaimer:

The insights in this article are based on information available at the time of writing. As the digital landscape evolves, so may the relevance of this advice. I encourage you to do your own research to stay informed. I’m not liable for any changes after publication or for the content of external links.

In the past few days, there have been rumours that Tumblr and WordPress.com are in talks with AI companies such as OpenAI and Midjourney. The deal is said to cover the use of blog content for AI training. According to The Verge, none of the companies has made an official statement so far.

The WordPress ecosystem has two sides.

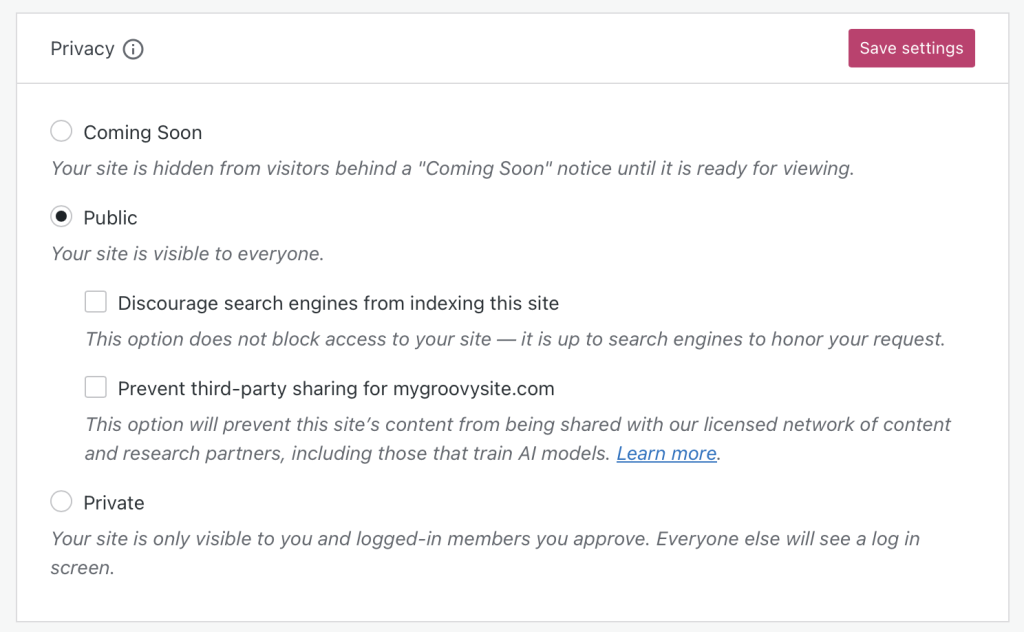

One is WordPress.com, run by Automattic, which has a privacy option to disallow AI bots from parsing your site’s data. Read more about the Privacy Options.

Sites that do not enable this option may already have shared their data for AI training, or may do so in the future.

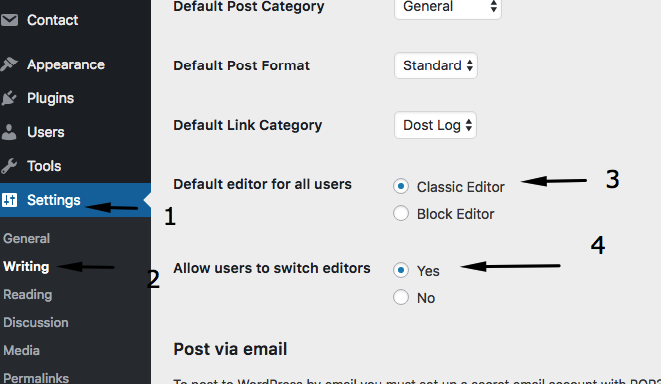

The second side is WordPress.org, which the WordPress Foundation manages. Self-hosted WordPress has no built-in privacy option for these bots yet.

I’m a self-hosted WordPress user, and I don’t worry about it. But if you think it’s important to protect your data from AI bots, you can use either of the following two methods.

- Using robots.txt

If you have edited robots.txt before, you can use the Darkvisitors robots.txt builder to generate user-agent rules for AI bots and add them to your robots.txt. This gives your site one layer of protection from those bots.
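To give a rough idea of what such rules look like, here is a minimal Python sketch that builds the robots.txt entries and checks them with the standard library's parser. The bot names in `AI_USER_AGENTS` are only an illustrative sample that I am assuming for this example, not a complete or current list, and the helper names `build_robots_txt` and `is_allowed` are made up for this post. Keep in mind that robots.txt is a request, so it only works for bots that choose to respect it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative sample of AI crawler user agents (an assumption for this
# sketch; use a maintained list or generator for real sites).
AI_USER_AGENTS = ["GPTBot", "CCBot", "Google-Extended"]


def build_robots_txt(user_agents, disallow_path="/"):
    """Return robots.txt text with one Disallow block per user agent."""
    blocks = [
        f"User-agent: {agent}\nDisallow: {disallow_path}"
        for agent in user_agents
    ]
    # A blank line separates each block, as robots.txt files usually do.
    return "\n\n".join(blocks) + "\n"


def is_allowed(robots_txt, user_agent, url):
    """Check whether the given rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Print the rules so they can be pasted into an existing robots.txt.
    print(build_robots_txt(AI_USER_AGENTS))
```

You would append the printed rules to your existing robots.txt instead of replacing the file, so that rules for regular search engines stay in place.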

- Using ai.txt

ai.txt is specific to AI bots and works on the same mechanism as robots.txt. Check out the Spawning ai.txt generator, which creates a file that restricts or permits the use of your site’s content for AI training.

In my opinion, anything published publicly should be open to all, whether bot or human. In the end, it is humans who use generative AI for various activities.

Happy Blogging 😉